Use the FPGA Fast Path to Building High-Performance Power-Efficient Edge AI Applications

Contributed By DigiKey's North American Editors

2021-01-12

Designers looking to implement artificial intelligence (AI) algorithms on inference processors at the edge are under constant pressure to lower power consumption and development time, even as processing demands increase. Field programmable gate arrays (FPGAs) offer a particularly effective combination of speed and power efficiency for implementing the neural network (NN) inference engines required for edge AI. For developers unfamiliar with FPGAs, however, conventional FPGA development methods can seem complex, often causing developers to turn to less optimal solutions.

This article describes a simpler approach from Microchip Technology that lets developers bypass the traditional FPGA development flow. Developers can either implement trained NNs on FPGAs using a software development kit (SDK), or use an FPGA-based video kit to move immediately into smart embedded vision application development.

Why use AI at the edge?

Edge computing brings a number of benefits to Internet of Things (IoT) applications in segments as varied as industrial automation, security systems, smart homes, and more. In an Industrial IoT (IIoT) application targeting the factory floor, edge computing can dramatically improve response time in process control loops by eliminating roundtrip delays to cloud-based applications. Similarly, an edge-based security system or smart home door lock can continue to function even when the connection to the cloud is lost accidentally or intentionally. In many cases, the use of edge computing in any of these applications can help lower overall operating cost by reducing the product's reliance on cloud resources. Rather than face an unexpected need for additional expensive cloud resources as demand for their products increases, developers can rely on local processing capabilities built into their products to help maintain more stable operating expenses.

The rapid acceptance of, and increased demand for, machine learning (ML) inference models dramatically amplifies the importance of edge computing. For developers, local processing of inference models helps reduce response latency and the cost of the cloud resources required for cloud-based inference. For users, local inference models add confidence that their products will continue to function despite occasional loss of Internet connectivity or changes in the product vendor's cloud-based offerings. In addition, concerns about security and privacy can further drive the need for local processing and inference, limiting the amount of sensitive information transferred to the cloud over the public Internet.

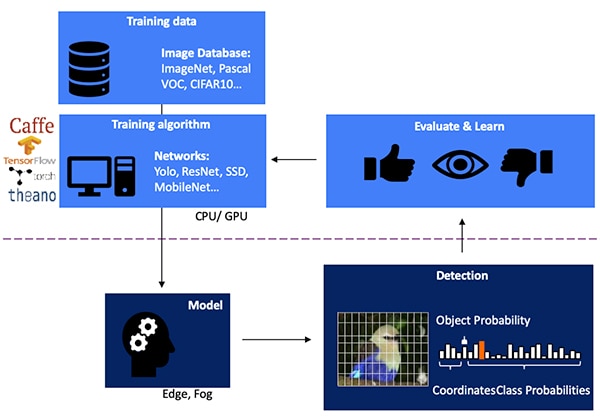

Developing an NN inference model for vision-based object detection is a multistep process. It starts with model training, typically performed on an ML framework such as TensorFlow using publicly available labeled images or custom labeled images. Because of the processing demands, model training is typically performed with graphics processing units (GPUs) in the cloud or on another high-performance computing platform. After training completes, the model is converted to an inference model that can run on edge or fog computing resources and deliver the inference results as a set of object class probabilities (Figure 1).

Figure 1: Implementing an inference model for edge AI lies at the end of a multistep process requiring training and optimization of NNs on frameworks using available or custom training data. (Image source: Microchip Technology)


Why inference models are computationally challenging

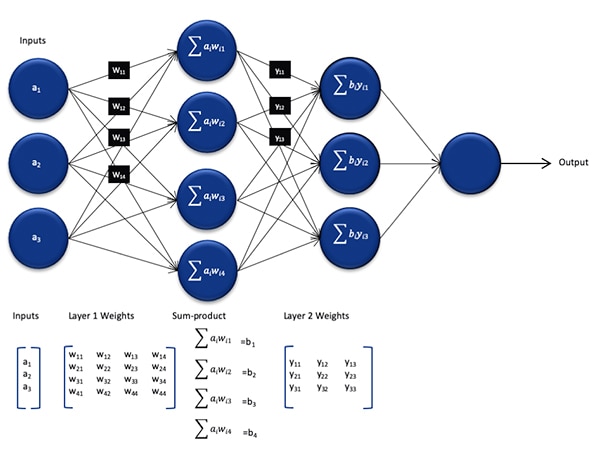

Although it is reduced in size and complexity compared to the model used during training, an NN inference model still presents a computational challenge for general purpose processors because of the large number of calculations it requires. In its generic form, a deep NN model comprises multiple layers, each containing a set of neurons. Within each layer of a fully connected network, each neuron $n_{ij}$ must compute the sum of the products of each input with an associated weight $w_{ij}$ (Figure 2).

Figure 2: The number of calculations required for inference with an NN can impose a significant computational workload. (Image source: Microchip Technology)


Not shown in Figure 2 is the additional computational requirement imposed by the activation function. This function modifies the output of each neuron by mapping negative values to zero, mapping values greater than 1 to 1, or applying a similar nonlinear mapping. The output of the activation function for each neuron $n_{ij}$ serves as an input to the next layer $i+1$, and the process continues in this fashion for each layer. Finally, the output layer of the NN model produces an output vector giving the probability that the original input vector (or matrix) corresponds to each of the classes (or labels) used during the supervised learning process.
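To make the arithmetic concrete, here is a minimal pure-Python sketch of the generic computation described above. Each neuron sums input × weight products, an activation function is applied, and softmax turns the output layer into class probabilities. The toy weights, the bias terms, and the use of softmax at the output are illustrative assumptions and are not taken from Figure 2.

```python
import math
from typing import Callable, List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[neuron][input], biases[neuron])


def dense_layer(inputs: Sequence[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Fully connected layer: each neuron sums input*weight products, plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, neuron_weights):
            acc += x * w  # one multiply-accumulate (MAC) per connection
        outputs.append(acc)
    return outputs


def relu(values: List[float]) -> List[float]:
    """Map negative values to zero."""
    return [max(0.0, v) for v in values]


def clip01(values: List[float]) -> List[float]:
    """Map negative values to zero and values greater than 1 to 1."""
    return [min(1.0, max(0.0, v)) for v in values]


def softmax(values: List[float]) -> List[float]:
    """Turn output-layer scores into class probabilities that sum to 1."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x: Sequence[float], layers: List[Layer],
            activation: Callable[[List[float]], List[float]] = relu) -> List[float]:
    """Each hidden layer's activated outputs become the next layer's inputs."""
    for weights, biases in layers[:-1]:
        x = activation(dense_layer(x, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense_layer(x, weights, biases))


# Toy network: 2 inputs -> 3 hidden neurons -> 2 classes (made-up weights).
layers: List[Layer] = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5], [-0.5, 0.5, 1.0]], [0.0, 0.0]),
]
probs = forward([1.0, 2.0], layers)
macs = sum(len(w) * len(w[0]) for w, _ in layers)  # 2*3 + 3*2 = 12 MACs per inference
```

Even this toy network needs one MAC per connection. Real models have millions of connections, which is where the computational burden comes from.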

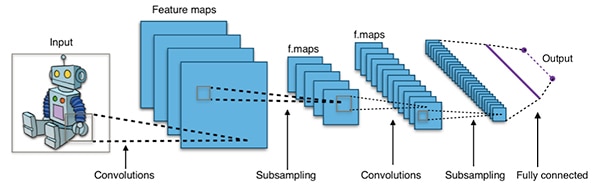

Effective NN models are built with architectures that are much larger and more complex than the representative generic NN architecture shown above. For example, a typical convolutional NN (CNN) used for image object detection applies these principles in a piecewise fashion, scanning across the width, height and color depth of an input image to produce a series of feature maps that finally yield the output prediction vector (Figure 3).

Figure 3: CNNs used for image object detection involve large numbers of neurons in many layers, imposing greater demand on the compute platform. (Image source: Aphex34 CC BY-SA 4.0)

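The piecewise scanning a CNN performs can be sketched as a plain 2D convolution. Each kernel slides across the image width and height and sums over every color channel to produce one feature map. This is a minimal stride-1, unpadded sketch. The layer sizes in the MAC-count example (a 224 × 224 RGB input with 32 kernels of 3 × 3) are illustrative assumptions, not taken from any specific network.

```python
from typing import List

Tensor3 = List[List[List[float]]]  # [channels][height][width]


def conv2d_valid(image: Tensor3, kernels: List[Tensor3]) -> Tensor3:
    """Slide each kernel across the image's width and height, summing over all
    input channels (color depth). Stride 1, no padding; one feature map per kernel."""
    channels, height, width = len(image), len(image[0]), len(image[0][0])
    kh, kw = len(kernels[0][0]), len(kernels[0][0][0])
    out_h, out_w = height - kh + 1, width - kw + 1
    feature_maps = []
    for kernel in kernels:
        fmap = [[0.0] * out_w for _ in range(out_h)]
        for y in range(out_h):
            for x in range(out_w):
                acc = 0.0
                for c in range(channels):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += image[c][y + dy][x + dx] * kernel[c][dy][dx]
                fmap[y][x] = acc
        feature_maps.append(fmap)
    return feature_maps


def conv_mac_count(channels: int, height: int, width: int,
                   num_kernels: int, kh: int, kw: int) -> int:
    """MACs performed by one stride-1, unpadded convolution layer."""
    out_h, out_w = height - kh + 1, width - kw + 1
    return num_kernels * out_h * out_w * channels * kh * kw


# A single 3x3 convolution layer with 32 kernels over a 224x224 RGB image:
print(conv_mac_count(3, 224, 224, 32, 3, 3))  # 42581376
```

That one layer already needs roughly 42.6 million MACs per frame. Dedicated hardware multiply-accumulate resources pay off at this scale.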

Using FPGAs to accelerate NN math

Although a number of options continue to emerge for executing inference models at the edge, few alternatives provide an optimal blend of flexibility, performance and power efficiency needed for practical high-speed inference at the edge. Among readily available alternatives for edge AI, FPGAs are particularly effective because they can provide high-performance hardware-based execution of compute-intensive operations while consuming relatively little power.

Despite their advantages, FPGAs are sometimes bypassed because the traditional development flow can be daunting to developers without extensive FPGA experience. To create an effective FPGA implementation of an NN model generated by an NN framework, the developer would need to understand the nuances of converting the model into a register transfer level (RTL) description, synthesizing the design, and working through the final place-and-route physical design stage to produce an optimized implementation (Figure 4).

Figure 4: To implement an NN model on an FPGA, developers have until now needed to understand how to convert their models to RTL and work through the traditional FPGA flow. (Image source: Microchip Technology)


With its PolarFire FPGAs, specialized software, and associated intellectual property (IP), Microchip Technology provides a solution that makes high-performance, low-power inference at the edge broadly available to developers without FPGA experience.

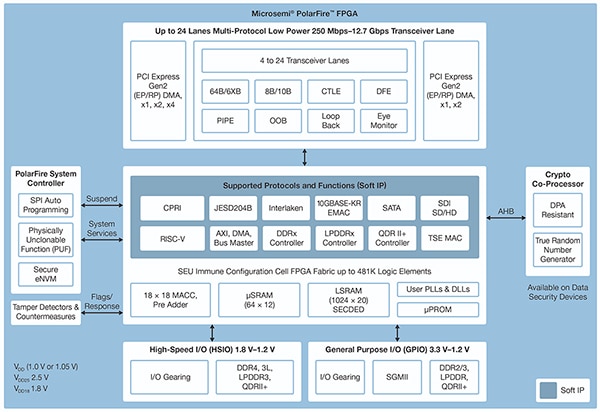

Fabricated in an advanced non-volatile process technology, the PolarFire FPGAs are designed to maximize flexibility and performance while minimizing power consumption. Along with an extensive array of high-speed interfaces for communications and input/output (I/O), they feature a deep FPGA fabric able to support advanced functionality using soft IP cores, including RISC-V processors, advanced memory controllers, and other standard interface subsystems (Figure 5).

Figure 5: The Microchip Technology PolarFire architecture provides a deep fabric designed to support high-performance design requirements including compute-intensive inference model implementation. (Image source: Microchip Technology)


The PolarFire FPGA fabric provides an extensive set of logic elements and specialized blocks, supported in a range of capacities by different members of the PolarFire FPGA family, including the MPF100T, MPF200T, MPF300T, and MPF500T series (Table 1).


Table 1: A variety of FPGA fabric features and capacities are available in the PolarFire series. (Table source: DigiKey, based on Microchip Technology PolarFire datasheet)

Among the features of particular interest for inference acceleration, the PolarFire architecture includes a dedicated math block that provides an 18-bit × 18-bit signed multiply-accumulate (MAC) function with a pre-adder. A built-in dot product mode lets a single math block perform two 8-bit multiply operations. This increases multiply capacity for models quantized to 8 bits, taking advantage of the typically negligible impact of such quantization on model accuracy.
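The dot product mode itself is a hardware feature. The pure-Python sketch below illustrates only the arithmetic that makes 8-bit inference viable: weights and activations are mapped to signed 8-bit integers, multiplied with integer MACs, and rescaled. Symmetric per-tensor quantization is an assumed scheme here, and the VectorBlox tools may quantize differently. The sample values are made up.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one scale per tensor."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantized_dot(a: List[float], b: List[float], bits: int = 8) -> float:
    """Dot product computed with integer multiplies, then rescaled to real units."""
    qa, sa = quantize_symmetric(a, bits)
    qb, sb = quantize_symmetric(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer MACs into a wide accumulator
    return acc * sa * sb


weights = [0.12, -0.5, 0.33, 0.9]
activations = [1.0, 0.25, -0.75, 0.4]
exact = sum(w * x for w, x in zip(weights, activations))
approx = quantized_dot(weights, activations)
```

For these values, the 8-bit result differs from the floating-point result by well under 0.01. That gap is the kind of small error that makes 8-bit math blocks attractive for inference.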

In addition to accelerating the mathematical operations, the PolarFire architecture helps relieve the memory congestion encountered when implementing inference models on general purpose architectures. It does so with features such as small distributed memories for storing the intermediate results created during NN algorithm execution. Also, an NN model's weights and bias values can be stored in a 16-deep × 18-bit coefficient read-only memory (ROM) built from logic elements located near the math block.

Combined with other PolarFire FPGA fabric features, math blocks provide the foundation for Microchip Technology's higher level CoreVectorBlox IP. This serves as a flexible NN engine able to execute different types of NNs. Along with a set of control registers, the CoreVectorBlox IP includes three major functional blocks:

- Microcontroller: A simple RISC-V soft processor that reads the Microchip firmware binary large object (BLOB) and the user's specific NN BLOB file from external storage. It controls overall CoreVectorBlox operations by executing instructions from the firmware BLOB.

- Matrix processor (MXP): A soft processor comprising eight 32-bit arithmetic logic units (ALUs) and designed to perform parallel operations on data vectors using elementwise tensor operations, including add, sub, xor, shift, mul, dotprod, and others, using mixed 8-bit, 16-bit and 32-bit precision, as needed.

- CNN accelerator: Accelerates MXP operations using a two-dimensional array of MAC functions implemented using math blocks and operating with 8-bit precision.
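The MXP's elementwise vector operations with mixed precision can be illustrated in software. The sketch below is a hypothetical behavioral model, not the MXP instruction set. It processes vectors eight elements at a time to mirror the eight ALUs, wraps results to a chosen signed width, and computes an 8-bit dot product with 16-bit products and a 32-bit accumulator. The names `LANES`, `wrap`, `vector_op`, and `vector_dotprod` are illustrative.

```python
from typing import Callable, List

LANES = 8  # the MXP is described as having eight 32-bit ALUs


def wrap(value: int, bits: int) -> int:
    """Two's-complement wraparound to a signed integer of the given width."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value >= (1 << (bits - 1)) else value


def vector_op(a: List[int], b: List[int], op: Callable[[int, int], int], out_bits: int) -> List[int]:
    """Elementwise op over two equal-length vectors, processed LANES elements at a time.
    Each outer iteration stands in for one step in which up to LANES ALUs would
    work in parallel; here the lanes simply run sequentially."""
    if len(a) != len(b):
        raise ValueError("vectors must have equal length")
    result: List[int] = []
    for start in range(0, len(a), LANES):
        for x, y in zip(a[start:start + LANES], b[start:start + LANES]):
            result.append(wrap(op(x, y), out_bits))
    return result


def vector_dotprod(a: List[int], b: List[int]) -> int:
    """int8 x int8 products fit in int16; the running sum is kept in 32 bits."""
    products = vector_op(a, b, lambda x, y: x * y, out_bits=16)
    acc = 0
    for p in products:
        acc = wrap(acc + p, 32)
    return acc
```

Choosing the output width per operation mirrors the article's point about mixing 8-, 16-, and 32-bit precision. Narrow types are used where they are safe, and wide accumulators are used where sums grow.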

A complete NN processing system would combine a CoreVectorBlox IP block, memory, a memory controller, and a host processor such as Microchip's Mi-V RISC-V soft processor core (Figure 6).

Figure 6: The CoreVectorBlox IP block works with a host processor such as Microchip's Mi-V RISC-V microcontroller to implement an NN inference model. (Image source: Microchip Technology)


In a video system implementation, the host processor would load the firmware and network BLOBs from system flash memory and copy them into double data rate (DDR) random access memory (RAM) for use by the CoreVectorBlox block. As video frames arrive, the host processor writes them into DDR RAM and signals the CoreVectorBlox block to begin processing the image. After it runs the inference model defined in the network BLOB, the CoreVectorBlox block writes the results, including image classification, back into DDR RAM for use by the target application.
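The host-side sequence above can be modeled in a few lines. This is a hypothetical, synchronous software model meant only to show the data flow through DDR memory. `DdrRam`, `SimulatedNnEngine`, `host_loop`, the region names, and the representation of a network BLOB as a Python callable are all assumptions. None of them is the CoreVectorBlox register interface, and real hardware would signal completion asynchronously.

```python
from typing import Dict, List


class DdrRam:
    """Hypothetical stand-in for shared DDR memory, organized as named regions."""
    def __init__(self) -> None:
        self.regions: Dict[str, object] = {}


class SimulatedNnEngine:
    """Hypothetical software model of the NN engine. It is NOT the CoreVectorBlox
    register interface; it only mirrors the data flow through DDR."""
    def __init__(self, ddr: DdrRam) -> None:
        self.ddr = ddr

    def start(self) -> None:
        # Run the network (modeled as a Python callable) on the frame in DDR,
        # then write the results back to DDR for the application.
        network = self.ddr.regions["network_blob"]
        self.ddr.regions["results"] = network(self.ddr.regions["frame"])


def host_loop(flash: Dict[str, object], frames: List[object]) -> List[object]:
    ddr = DdrRam()
    # Copy the firmware and network BLOBs from flash into DDR once, at startup.
    for blob in ("firmware_blob", "network_blob"):
        ddr.regions[blob] = flash[blob]
    engine = SimulatedNnEngine(ddr)
    results = []
    for frame in frames:
        ddr.regions["frame"] = frame               # host writes the new frame to DDR
        engine.start()                             # host signals the engine to begin
        results.append(ddr.regions["results"])     # application reads results from DDR
    return results
```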

Development flow simplifies NN FPGA implementation

Microchip shields developers from the complexity of implementing an NN inference model on PolarFire FPGAs. Instead of dealing with the details of the traditional FPGA flow, NN model developers work with their NN frameworks as usual and load the resulting model into Microchip Technology's VectorBlox Accelerator Software Development Kit (SDK). The SDK generates the required set of files including those needed for the normal FPGA development flow and the firmware and network BLOB files mentioned earlier (Figure 7).

Figure 7: The VectorBlox Accelerator SDK manages the details of implementing an NN model on an FPGA, automatically generating files needed to design and run the FPGA-based inference model. (Image source: Microchip Technology)


Because the VectorBlox Accelerator SDK flow overlays the NN design onto the NN engine implemented in the FPGA, different NNs can run on the same FPGA design without the need to redo the FPGA design synthesis flow. Developers create C/C++ code for the resulting system and are able to switch models within the system on the fly or run models simultaneously using time slicing.
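The idea behind "same hardware, different networks" can be sketched as an engine that simply executes whichever network it is handed. The sketch shows both switching a model on the fly and round-robin time slicing across models. The SDK's actual C/C++ API and scheduling mechanism are not described here. `SharedEngine`, `load`, `run`, and `time_slice` are hypothetical names, and networks are again modeled as Python callables.

```python
from itertools import cycle
from typing import Callable, Dict, List, Tuple


class SharedEngine:
    """Hypothetical model of one fixed FPGA NN engine that runs whichever
    network BLOB it is handed. Changing models changes data, not hardware."""
    def __init__(self) -> None:
        self.networks: Dict[str, Callable[[object], object]] = {}

    def load(self, name: str, network: Callable[[object], object]) -> None:
        # Adding or replacing a network needs no FPGA resynthesis in this model.
        self.networks[name] = network

    def run(self, name: str, frame: object) -> object:
        return self.networks[name](frame)


def time_slice(engine: SharedEngine, names: List[str], frames: List[object]) -> List[Tuple[str, object]]:
    """Round-robin the frames across several loaded networks on the same engine."""
    return [(name, engine.run(name, frame)) for name, frame in zip(cycle(names), frames)]


engine = SharedEngine()
engine.load("classifier", lambda frame: max(frame))
engine.load("detector", lambda frame: [i for i, v in enumerate(frame) if v > 5])
schedule = time_slice(engine, ["classifier", "detector"], [[1, 9], [7, 2], [3, 4]])
engine.load("classifier", lambda frame: min(frame))  # switch models on the fly
```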

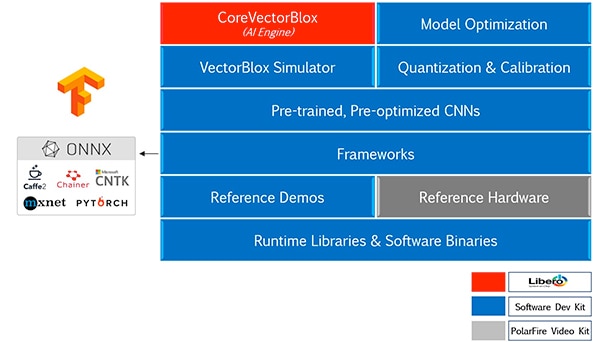

The VectorBlox Accelerator SDK melds the Microchip Technology Libero FPGA design suite with a comprehensive set of capabilities for NN inference model development. Along with model optimization, quantization, and calibration services, the SDK provides an NN simulator that lets developers use the same BLOB files to evaluate their model prior to their use in the FPGA hardware implementation (Figure 8).

Figure 8: The VectorBlox Accelerator SDK provides a comprehensive set of services designed to optimize FPGA implementation of framework-generated inference models. (Image source: Microchip Technology)

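Calibration, in general terms, means running representative inputs through a model, recording activation magnitudes, and choosing quantization scales from them. The pure-Python sketch below shows one common approach, max or percentile calibration with a symmetric 8-bit scale. This is an assumption for illustration, not a description of the VectorBlox SDK's calibration algorithm, and the sample activations are made up.

```python
import math
from typing import Iterable, List


def calibrate_scale(samples: Iterable[List[float]], percentile: float = 100.0, bits: int = 8) -> float:
    """Pick a symmetric quantization scale from activations observed on
    representative inputs. A percentile below 100 ignores rare outliers,
    at the cost of clipping them."""
    mags = sorted(abs(v) for sample in samples for v in sample)
    if not mags:
        raise ValueError("need at least one calibration value")
    idx = max(0, min(len(mags) - 1, math.ceil(percentile / 100.0 * len(mags)) - 1))
    threshold = mags[idx] or 1.0  # avoid a zero scale
    return threshold / (2 ** (bits - 1) - 1)


def quantize(values: List[float], scale: float, bits: int = 8) -> List[int]:
    """Round to the nearest integer step and saturate to the signed range."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


calibration_batch = [[0.5, -1.0], [2.0, 0.25]]  # made-up activation samples
full_range = calibrate_scale(calibration_batch)                 # covers the 2.0 outlier
clipped = calibrate_scale(calibration_batch, percentile=75.0)   # ignores it
```

The trade-off is resolution versus range. A tighter threshold gives finer steps for typical values, while values beyond it saturate at ±127.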

The VectorBlox Accelerator SDK supports models in Open Neural Network Exchange (ONNX) format as well as models from a number of frameworks, including TensorFlow, Caffe, Chainer, PyTorch, and MXNet. Supported CNN architectures include MNIST, MobileNet versions, ResNet-50, Tiny Yolo V2, and Tiny Yolo V3. Microchip is working to expand support to include most networks in the Open Model Zoo of pre-trained models for the open-source OpenVINO toolkit, including Yolo V3, Yolo V4, RetinaNet, and SSD-MobileNet, among many others.

Video kit demonstrates FPGA inference

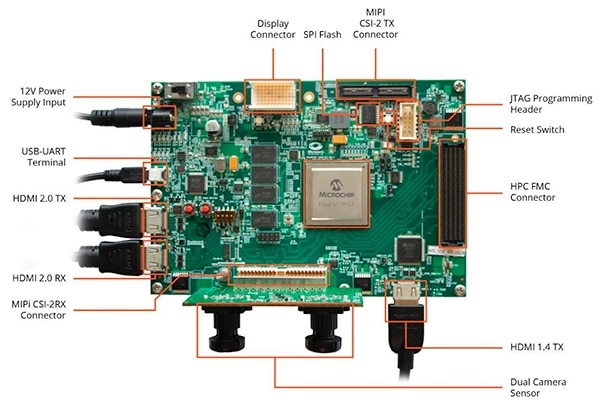

To help developers move quickly into smart embedded vision application development, Microchip Technology provides a comprehensive sample application designed to run on the company's MPF300-VIDEO-KIT PolarFire FPGA Video and Imaging Kit and reference design.

Based on the Microchip MPF300T PolarFire FPGA, the kit's board combines a dual camera sensor, double data rate 4 (DDR4) RAM, flash memory, power management, and a variety of interfaces (Figure 9).

Figure 9: The MPF300-VIDEO-KIT PolarFire FPGA Video and Imaging Kit and associated software provide developers with a quick start to FPGA-based inference in smart embedded vision applications. (Image source: Microchip Technology)


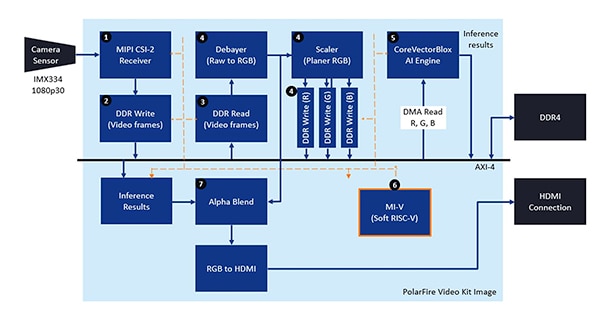

The kit comes with a complete Libero design project used to generate the firmware and network BLOB files. After programming the BLOB files into on-board flash memory, developers click the run button in Libero to start the demonstration. The demonstration processes video images from the camera sensor and shows the inference results on an HDMI display (Figure 10).

Figure 10: The Microchip Technology PolarFire FPGA Video and Imaging Kit demonstrates how to design and use an FPGA implementation of a smart embedded vision system built around the Microchip CoreVectorBlox NN engine. (Image source: Microchip Technology)


For each input video frame, the FPGA-based system executes the following steps (with step numbers correlating to Figure 10):

1. Load a frame from the camera.

2. Store the frame in RAM.

3. Read the frame from RAM.

4. Convert the raw image to RGB and then to planar RGB, and store the result in RAM.

5. The Mi-V soft RISC-V processor starts the CoreVectorBlox engine, which retrieves the image from RAM, performs inference, and stores the classification probability results back in RAM.

6. The Mi-V uses the results to create an overlay frame containing bounding boxes, classification results, and other metadata, and stores the overlay frame in RAM.

7. The original frame is blended with the overlay frame and written to the HDMI display.
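The last few steps of the per-frame sequence can be sketched in pure Python. The sketch converts an interleaved RGB frame to planar RGB, runs inference, draws bounding boxes into a transparent overlay, and alpha-blends the overlay onto the original frame. In the demo, this work is split between FPGA logic and the Mi-V processor. Here it is a software illustration only: demosaicing of the raw sensor data is omitted (the input is assumed to be RGB already), labels and other metadata are not drawn, and `infer` is a hypothetical stand-in for the CoreVectorBlox engine.

```python
from typing import Callable, List, Tuple

Rgb = Tuple[int, int, int]
Rgba = Tuple[int, int, int, int]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def to_planar(frame: List[List[Rgb]]) -> List[List[List[int]]]:
    """Interleaved RGB (height x width x 3) -> planar RGB (3 x height x width)."""
    return [[[px[c] for px in row] for row in frame] for c in range(3)]


def draw_box(overlay: List[List[Rgba]], box: Box, color: Rgb) -> None:
    """Draw an opaque 1-pixel rectangle outline into the overlay."""
    top, left, bottom, right = box
    for x in range(left, right + 1):
        overlay[top][x] = overlay[bottom][x] = (*color, 255)
    for y in range(top, bottom + 1):
        overlay[y][left] = overlay[y][right] = (*color, 255)


def blend(frame: List[List[Rgb]], overlay: List[List[Rgba]]) -> List[List[Rgb]]:
    """Alpha-blend the overlay onto the original frame for display."""
    return [
        [tuple((o * a + f * (255 - a)) // 255 for f, o in zip(px, (r, g, b)))
         for px, (r, g, b, a) in zip(frame_row, overlay_row)]
        for frame_row, overlay_row in zip(frame, overlay)
    ]


def process_frame(frame: List[List[Rgb]],
                  infer: Callable[[List[List[List[int]]]], List[Box]]) -> List[List[Rgb]]:
    planar = to_planar(frame)          # RGB -> planar RGB conversion step
    boxes = infer(planar)              # inference step; `infer` is a hypothetical stand-in
    height, width = len(frame), len(frame[0])
    overlay: List[List[Rgba]] = [[(0, 0, 0, 0)] * width for _ in range(height)]  # transparent
    for box in boxes:
        draw_box(overlay, box, (0, 255, 0))  # overlay step: bounding boxes only
    return blend(frame, overlay)       # blending step; result would go to the HDMI display
```

The planar layout groups all red values, then all green, then all blue. Many NN engines expect convolution inputs in that channel-major arrangement, which is why the conversion happens before inference.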

The demonstration supports acceleration of Tiny Yolo V3 and MobileNet V2 models. Developers can also run other SDK-supported models using the methods described earlier, by making a small code change that adds the model name and metadata to the existing list containing the two default models.

Conclusion

AI algorithms such as NN models typically impose compute-intensive workloads that require more robust computing resources than are available with general purpose processors. While FPGAs are well equipped to meet the performance and low-power requirements of inference model execution, conventional FPGA development methods can seem complex, often causing developers to turn to suboptimal solutions.

As shown, by using specialized IP and software from Microchip Technology, developers without FPGA experience can implement inference-based designs that better meet performance, power, and design schedule requirements.

Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.