Get Started Quickly With 3D Time-of-Flight Applications

Contributed By DigiKey's North American Editors

2020-03-25

3D time-of-flight (ToF) imaging offers an efficient alternative to video imaging for a broad range of applications including industrial safety, robotic navigation, gesture control interfaces, and much more. This approach does, however, require a careful blend of optical design, precision timing circuits, and signal processing capabilities that can often leave developers struggling to implement an effective 3D ToF platform.

This article will describe the nuances of ToF technology before showing how two off-the-shelf 3D ToF kits—Analog Devices’ AD-96TOF1-EBZ development platform and ESPROS Photonics’ EPC660 evaluation kit—can help developers quickly prototype 3D ToF applications and gain needed experience to implement 3D ToF designs to meet their unique requirements.

What is ToF technology?

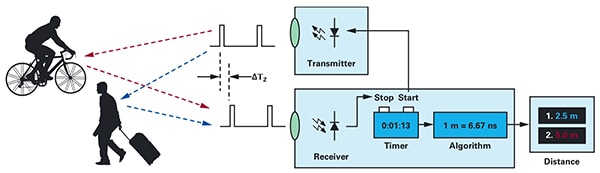

ToF technology relies on the familiar principle that the distance between an object and some source point can be found by measuring the difference between the time that energy is transmitted by the source and the time that its reflection is received by the source (Figure 1).

Figure 1: ToF systems calculate the distance between the system and external objects by measuring the time delay between energy transmission and the system's reception of energy reflected by an object. (Image source: Analog Devices)
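
The relationship in Figure 1 reduces to a single line of arithmetic: the energy travels to the object and back, so the one-way distance is half the propagation speed multiplied by the measured delay. The following is a minimal sketch of that calculation; the function name and the example delays are illustrative assumptions, not part of any vendor SDK.

```python
# Speed of light in vacuum, in meters per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(delay_s, wave_speed_m_per_s=SPEED_OF_LIGHT_M_PER_S):
    """Return the one-way distance in meters for a measured round-trip delay.

    wave_speed_m_per_s defaults to the speed of light for optical and RF
    systems; an ultrasonic system would pass the speed of sound instead.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    # The energy covers the distance twice (out and back), so halve the path.
    return wave_speed_m_per_s * delay_s / 2.0


if __name__ == "__main__":
    # Hypothetical optical measurement: a 10 ns round-trip delay.
    print(distance_from_round_trip(10e-9))
    # Hypothetical ultrasonic measurement: 10 ms delay at roughly 343 m/s in air.
    print(distance_from_round_trip(0.01, wave_speed_m_per_s=343.0))
```

Because light covers about 0.3 meters per nanosecond, even centimeter-level resolution requires timing precision well below a nanosecond, which is why the timing circuits discussed later carry so much of the design burden.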

Although the basic principle remains the same, ToF solutions vary widely and bear the capabilities and limitations inherent in their underlying technologies including ultrasound, light detection and ranging (LiDAR), cameras, and millimeter wave (mmWave) RF signals:

- Ultrasonic ToF solutions offer a low-cost solution but with limited range and spatial resolution of objects

- Optical ToF solutions can achieve greater range and spatial resolution than ultrasonic systems but are compromised by heavy fog or smoke

- Solutions based on mmWave technology are typically more complex and expensive, but they can operate at significant range while providing information about the target object's velocity and heading despite smoke, fog, or rain

Manufacturers take advantage of the capabilities of each technology as needed to meet specific requirements. For example, ultrasonic sensors are well suited for detecting obstructions as robots move across a path or as drivers park their vehicles. In contrast, mmWave technology provides vehicles with the kind of long-distance sensing capability needed to detect approaching road hazards even when other sensors are unable to penetrate heavy weather conditions.

ToF designs can be built around a single transmitter/receiver pair. For example, a simple optical ToF design conceptually requires only an LED to illuminate some region of interest and a photodiode to detect reflections from objects within that region of interest. This seemingly simple design nevertheless requires precise timing and synchronization circuits to measure the delay. In addition, modulation and demodulation circuits may be needed to differentiate the illumination signal from background sources or support more complex continuous wave methods.

Design complexity rises quickly as developers work to enhance the signal-to-noise ratio (SNR) and eliminate artifacts in ToF systems. Further compounding complexity, more advanced detection solutions employ multiple transmitters and receivers to track multiple objects or support more sophisticated motion tracking algorithms. For example, mmWave systems often employ multiple receivers to track the heading and velocity of multiple independent objects. (See "Use Millimeter Wave Radar Kits for Fast Development of Precision Object Detection Designs".)

3D optical ToF systems

3D optical ToF systems extend the idea of using more receivers by using imaging sensors typically based on an array of charge-coupled devices (CCDs). When a set of lenses focuses some region of interest onto the CCD array, each charge storage device in the CCD array is charged by the return illumination reflected from a corresponding point in that region of interest. Synchronized with pulsed or continuous wave illumination, reflected light reaching the CCD array is essentially captured in a sequence of windows or phases, respectively. This data is further processed to create a 3D depth map comprising voxels (VOlume piXELs) whose value represents the distance to the corresponding point in the region of interest.
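
For the continuous wave case, one widely used approach samples the reflected signal at four phase offsets (0°, 90°, 180°, and 270°) and recovers the phase shift, and from it the distance, at every pixel. The sketch below illustrates that general technique with NumPy. It is not the algorithm of any specific imager: the sample ordering, the sign convention, the 20 MHz modulation frequency, and the function names are all assumptions made for illustration.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range(f_mod_hz):
    """Maximum distance before the measured phase wraps past 2*pi."""
    return C / (2.0 * f_mod_hz)


def depth_from_phases(a0, a90, a180, a270, f_mod_hz):
    """Convert four phase-offset sample arrays into a per-pixel depth map (m).

    Assumes sample k is proportional to cos(phase - k * 90 degrees) plus a
    constant offset (background light), which the differences cancel out.
    """
    a0, a90, a180, a270 = (
        np.asarray(a, dtype=np.float64) for a in (a0, a90, a180, a270)
    )
    phase = np.arctan2(a90 - a270, a0 - a180)
    phase = np.mod(phase, 2.0 * np.pi)  # map (-pi, pi] onto [0, 2*pi)
    return C * phase / (4.0 * np.pi * f_mod_hz)


def simulate_samples(distance_m, f_mod_hz, amplitude=100.0, offset=500.0):
    """Generate ideal, noise-free samples for known distances (test helper)."""
    phase = 4.0 * np.pi * f_mod_hz * np.asarray(distance_m, dtype=np.float64) / C
    return tuple(
        offset + amplitude * np.cos(phase - k * np.pi / 2.0) for k in range(4)
    )


if __name__ == "__main__":
    f_mod = 20e6  # assumed modulation frequency
    true_depth = np.array([[0.5, 2.0], [4.0, 7.0]])
    samples = simulate_samples(true_depth, f_mod)
    print(unambiguous_range(f_mod))
    print(depth_from_phases(*samples, f_mod))
```

The differences between opposing samples remove the constant background term, which is one reason this style of measurement tolerates ambient light. The trade-off is the unambiguous range: objects farther away than c/(2f) alias back to shorter distances.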

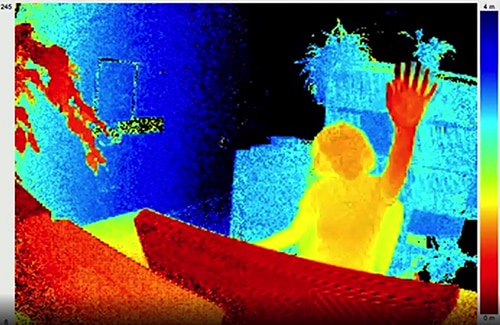

Like frames in a video, individual depth maps can be captured in sequence to provide measurements with temporal resolution limited only by the frame rate of the image capture system and with spatial resolution limited only by the CCD array and optical system. With the availability of larger 320 x 240 CCD imagers, higher resolution 3D optical ToF systems find applications in broadly diverse segments including industrial automation, unmanned aerial vehicles (UAVs), and even gesture interfaces (Figure 2).

Figure 2: With their high frame rate and spatial resolution, 3D optical ToF can provide gesture interface systems with detailed data such as a person's hand being raised toward the ToF camera as shown here. (Image source: ESPROS Photonics)

Unlike most camera-based methods, 3D ToF systems can provide accurate results despite shading or changing lighting conditions. These systems provide their own illumination, typically using lasers or high-power infrared LEDs such as Lumileds' Luxeon IR LEDs, which can operate at the megahertz (MHz) switching rates used in these systems. Unlike methods such as stereoscopic cameras, 3D ToF systems provide a compact solution for generating detailed distance information.

Pre-built solutions

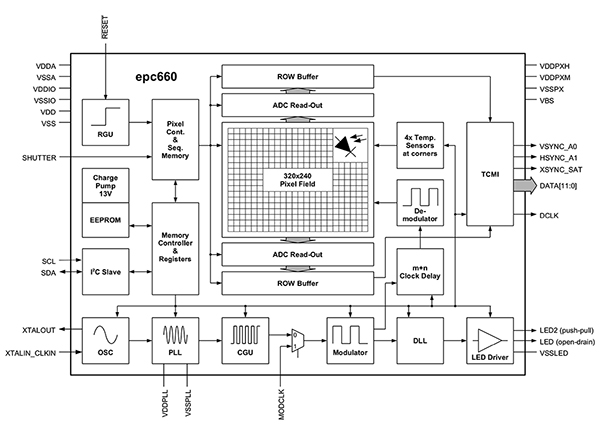

To implement 3D ToF systems, however, developers face multiple design challenges. Besides the timing circuits mentioned earlier, these systems depend on a carefully designed signal processing pipeline optimized to rapidly read results from the CCD array for each window or phase measurement, and then complete the processing required to turn that raw data into depth maps. Advanced 3D ToF imagers such as ESPROS Photonics' EPC660-CSP68-007 ToF imager combine a 320 x 240 CCD array with the full complement of timing and signal processing capabilities required to perform 3D ToF measurements and provide 12-bit distance data per pixel (Figure 3).

Figure 3: The ESPROS Photonics epc660 integrates a 320 x 240 pixel imager with a full complement of timing circuits and controllers required to convert raw imager data into depth maps. (Image source: ESPROS Photonics)
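
A quick calculation shows why the readout pipeline matters. The sketch below estimates the size of one 320 x 240 frame of 12-bit distance data and the resulting data rate. The 30 frames per second figure and the tight bit packing are assumptions chosen for illustration, not specifications of the epc660.

```python
def frame_size_bytes(width, height, bits_per_pixel):
    """Bytes needed for one frame, assuming tightly packed pixel data."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


def data_rate_bytes_per_s(width, height, bits_per_pixel, frames_per_s):
    """Sustained data rate for a stream of frames."""
    return frame_size_bytes(width, height, bits_per_pixel) * frames_per_s


if __name__ == "__main__":
    # 320 x 240 pixels, 12-bit distance per pixel, assumed 30 frames/s.
    print(frame_size_bytes(320, 240, 12))
    print(data_rate_bytes_per_s(320, 240, 12, 30))
```

This counts only the finished distance data. If each depth map is derived from several raw window or phase captures, the raw readout rate inside the imager is correspondingly higher.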

ESPROS Photonics’ EPC660-007 card-edge connector chip carrier mounts the epc660 imager on a 37.25 x 36.00 millimeter (mm) printed circuit board (pc board) complete with decoupling capacitors and card edge connector. Although this chip carrier addresses the basic hardware interface in a 3D ToF system design, developers are left with the tasks of completing the appropriate optical design on the front end and providing processing resources on the back end. ESPROS Photonics’ epc660 evaluation kit eliminates these tasks by providing a full 3D ToF application development environment that includes a pre-built 3D ToF imaging system and associated software (Figure 4).

Figure 4: The ESPROS Photonics epc660 evaluation kit provides a pre-built 3D ToF camera system and associated software for using depth information in applications. (Image source: ESPROS Photonics)

Designed for evaluation and rapid prototyping, the ESPROS kit provides a pre-assembled camera system that combines the epc660 CC chip carrier, optical lens assembly, and a set of eight LEDs. Along with the camera system, a BeagleBone Black processor board with 512 megabytes (Mbytes) of RAM and 4 gigabytes (Gbytes) of flash serves as the host controller and application processing resource.

ESPROS also provides epc660 eval kit support software that can be downloaded from its website and opened with a password that can be requested from the company’s local sales office. After gaining access to the software, developers simply run a graphical user interface (GUI) application with one of several provided configuration files to begin operating the camera system. The GUI application also provides control and display windows for setting additional parameters including spatial and temporal filter settings and finally for viewing the results. With minimal effort developers can use the kit to begin capturing depth maps in real time and use them as input to their own applications software.
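
To give a sense of what spatial and temporal filter settings typically control, the sketch below applies two generic filters to depth maps held as NumPy arrays: a 3 x 3 median filter that suppresses isolated outlier pixels within a frame, and an exponential moving average that smooths each pixel across frames. These are common textbook choices and are not taken from the ESPROS software; the function names and the alpha parameter are assumptions.

```python
import numpy as np


def spatial_median_3x3(depth):
    """Replace each pixel with the median of its 3 x 3 neighborhood."""
    depth = np.asarray(depth, dtype=np.float64)
    rows, cols = depth.shape
    # Repeat edge values so border pixels also have nine neighbors.
    padded = np.pad(depth, 1, mode="edge")
    windows = np.stack(
        [padded[r:r + rows, c:c + cols] for r in range(3) for c in range(3)]
    )
    return np.median(windows, axis=0)


def temporal_filter(previous, current, alpha=0.5):
    """Exponential moving average across frames.

    alpha near 1 favors the newest frame (fast response, more noise);
    alpha near 0 favors history (smoother, but moving objects smear).
    """
    current = np.asarray(current, dtype=np.float64)
    if previous is None:  # first frame: nothing to blend with yet
        return current
    return alpha * current + (1.0 - alpha) * np.asarray(previous, dtype=np.float64)


if __name__ == "__main__":
    frame = np.full((5, 5), 1000.0)
    frame[2, 2] = 5000.0  # a single "flying pixel" outlier
    print(spatial_median_3x3(frame))
    print(temporal_filter(np.full((2, 2), 1000.0), np.full((2, 2), 2000.0), 0.25))
```

Both filters trade detail for stability: the median removes single-pixel spikes at the cost of fine spatial features, while the moving average reduces frame-to-frame jitter at the cost of added latency.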

Enhanced resolution 3D ToF systems

A 320 x 240 imager such as the ESPROS epc660 can serve many applications but may lack the resolution required to detect small movements in gesture interfaces or to distinguish small objects without severely restricting the range of interest. For these applications, the availability of ready-made development kits based on 640 x 480 ToF sensors enables developers to quickly prototype high resolution applications.
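
The resolution trade-off can be estimated with simple geometry: the width of scene covered by one pixel grows with distance and with field of view, and shrinks as the pixel count rises. The sketch below computes that footprint for an idealized lens. The 90° horizontal field of view and 2 meter distance are assumed values for illustration only and do not describe any of the kits discussed here.

```python
import math


def pixel_footprint_m(distance_m, fov_deg, pixels_across):
    """Approximate scene width covered by one pixel at a given distance.

    Assumes an ideal, distortion-free lens with the field of view spread
    evenly across the pixel row.
    """
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return scene_width_m / pixels_across


if __name__ == "__main__":
    for pixels in (320, 640):
        footprint = pixel_footprint_m(2.0, 90.0, pixels)
        print(pixels, "pixels across:", round(footprint * 1000.0, 2), "mm per pixel")
```

Under these assumptions, doubling the horizontal pixel count halves the footprint, so a 640 x 480 sensor can resolve features half the size at the same distance, or the same features at twice the distance, without narrowing the field of view.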

Seeed Technology's DepthEye Turbo depth camera integrates a 640 x 480 ToF sensor, four 850 nanometer (nm) vertical-cavity surface-emitting laser (VCSEL) diodes, illumination and sensing operating circuitry, power, and USB interface support in a self-contained cube measuring 57 x 57 x 51 mm. Software support is provided through an open-source libPointCloud SDK GitHub repository with support for Linux, Windows, macOS, and Android platforms.

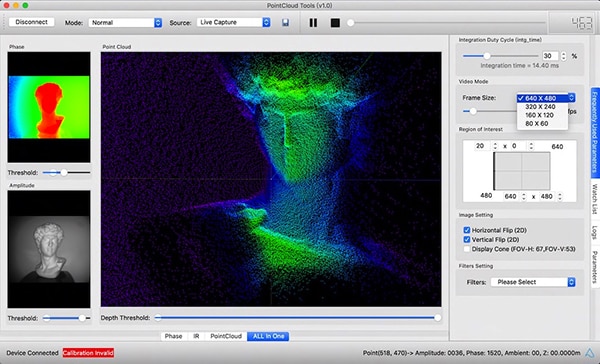

Along with C++ drivers, libraries and sample code, the libPointCloud SDK distribution includes a Python API for rapid prototyping as well as a visualization tool. After installing the distribution package on their host development platform, developers can connect the camera via USB to their computer and immediately begin using the visualization tool to display phase, amplitude, or point cloud maps, which are essentially enhanced depth maps rendered with texture surfaces to provide a smoother 3D image (Figure 5).

Figure 5: Used in combination with the Seeed Technology DepthEye Turbo depth camera, the associated software package enables developers to easily visualize 3D ToF data in a variety of renderings including point clouds as shown here in the main window pane. (Image source: Seeed Technology/PointCloud.AI)
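
Conceptually, a point cloud is obtained by projecting each depth pixel back into 3D space using the camera's geometry. The sketch below shows that back-projection for a simple pinhole camera model using NumPy. It is not the libPointCloud implementation: the intrinsic parameters (fx, fy, cx, cy) are hypothetical, and the code assumes each depth value is the distance along the optical axis rather than the radial distance to the object.

```python
import numpy as np


def depth_to_point_cloud(depth_m, fx, fy, cx, cy):
    """Back-project a depth map into an (N, 3) array of XYZ points in meters.

    fx, fy are focal lengths in pixels; cx, cy locate the optical center.
    Pixels with zero or negative depth are treated as invalid and dropped.
    """
    depth_m = np.asarray(depth_m, dtype=np.float64)
    rows, cols = depth_m.shape
    # u is the column index and v the row index of every pixel.
    u, v = np.meshgrid(np.arange(cols), np.arange(rows))
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    points = np.stack([x, y, depth_m], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]


if __name__ == "__main__":
    depth = np.full((3, 3), 2.0)
    depth[0, 0] = 0.0  # mark one pixel as invalid
    print(depth_to_point_cloud(depth, fx=2.0, fy=2.0, cx=1.0, cy=1.0))
```

A real camera would supply calibrated intrinsics and usually lens distortion coefficients as well, so results from this idealized model should be treated as approximate.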

Analog Devices' AD-96TOF1-EBZ 3D ToF evaluation kit provides a more open hardware design built with a pair of boards and designed to use a Raspberry Pi 3 Model B+ or Raspberry Pi 4 as the host controller and local processing resource (Figure 6).

Figure 6: The Analog Devices AD-96TOF1-EBZ 3D ToF evaluation kit combines a two-board assembly for illumination and data acquisition with a Raspberry Pi board for local processing. (Image source: Analog Devices)

The kit's analog front-end (AFE) board holds the optical assembly, CCD array and buffers, firmware storage, and a processor that manages overall camera operation including illumination timing, sensor synchronization, and depth map generation. The second board holds four 850 nm VCSEL diodes and drivers and is designed to connect to the AFE board so that the laser diodes surround the optical assembly as shown in Figure 6.

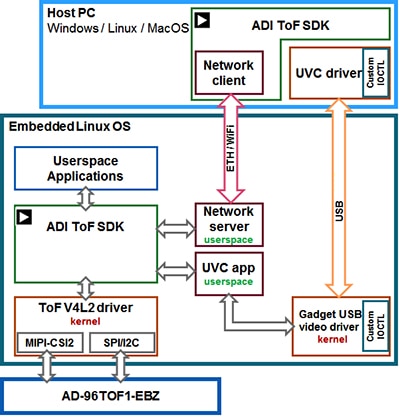

Analog Devices supports the AD-96TOF1-EBZ kit with its open-source 3D ToF software suite featuring the 3D ToF SDK along with sample code and wrappers for C/C++, Python, and MATLAB. To support both host applications and low-level hardware interactions in a networked environment, Analog Devices splits the SDK into a host partition optimized for USB and network connectivity, and a low-level partition running on Embedded Linux and built on top of a Video4Linux2 (V4L2) driver (Figure 7).

Figure 7: The Analog Devices 3D ToF SDK API supports applications running on the local Embedded Linux host and applications running remotely on networked hosts. (Image source: Analog Devices)

This network-enabled SDK allows applications running on network connected hosts to work remotely with a ToF hardware system to access the camera and capture depth data. User programs can also run in the Embedded Linux partition and take full advantage of advanced options available at that level.

As part of the software distribution, Analog Devices provides sample code demonstrating key low-level operational capabilities such as camera initialization, basic frame capture, remote access, and cross-platform capture on a host computer and locally with Embedded Linux. Additional sample applications build on these basic operations to illustrate the use of captured data in higher level applications such as point cloud generation. In fact, a sample application demonstrates how a deep neural network (DNN) inference model can be used to classify data generated by the camera system. Written in Python, this DNN sample application (dnn.py) shows each step of the process required to acquire data and prepare it for classification by the inference model (Listing 1).

    import aditofpython as tof
    import numpy as np
    import cv2 as cv
    . . .
    try:
        net = cv.dnn.readNetFromCaffe(args.prototxt, args.weights)
    except:
        print("Error: Please give the correct location of the prototxt and caffemodel")
        sys.exit(1)

    swapRB = False
    classNames = {0: 'background',
                  1: 'aeroplane', 2: 'bicycle', 3: 'bird', 4: 'boat',
                  5: 'bottle', 6: 'bus', 7: 'car', 8: 'cat', 9: 'chair',
                  10: 'cow', 11: 'diningtable', 12: 'dog', 13: 'horse',
                  14: 'motorbike', 15: 'person', 16: 'pottedplant',
                  17: 'sheep', 18: 'sofa', 19: 'train', 20: 'tvmonitor'}

    system = tof.System()
    status = system.initialize()
    if not status:
        print("system.initialize() failed with status: ", status)

    cameras = []
    status = system.getCameraList(cameras)
    . . .
    while True:
        # Capture frame-by-frame
        status = cameras[0].requestFrame(frame)
        if not status:
            print("cameras[0].requestFrame() failed with status: ", status)

        depth_map = np.array(frame.getData(tof.FrameDataType.Depth), dtype="uint16", copy=False)
        ir_map = np.array(frame.getData(tof.FrameDataType.IR), dtype="uint16", copy=False)

        # Creation of the IR image
        ir_map = ir_map[0: int(ir_map.shape[0] / 2), :]
        ir_map = np.float32(ir_map)
        distance_scale_ir = 255.0 / camera_range
        ir_map = distance_scale_ir * ir_map
        ir_map = np.uint8(ir_map)
        ir_map = cv.cvtColor(ir_map, cv.COLOR_GRAY2RGB)

        # Creation of the Depth image
        new_shape = (int(depth_map.shape[0] / 2), depth_map.shape[1])
        depth_map = np.resize(depth_map, new_shape)
        distance_map = depth_map
        depth_map = np.float32(depth_map)
        distance_scale = 255.0 / camera_range
        depth_map = distance_scale * depth_map
        depth_map = np.uint8(depth_map)
        depth_map = cv.applyColorMap(depth_map, cv.COLORMAP_RAINBOW)

        # Combine depth and IR for more accurate results
        result = cv.addWeighted(ir_map, 0.4, depth_map, 0.6, 0)

        # Start the computations for object detection using DNN
        blob = cv.dnn.blobFromImage(result, inScaleFactor, (inWidth, inHeight), (meanVal, meanVal, meanVal), swapRB)
        net.setInput(blob)
        detections = net.forward()
        . . .
        for i in range(detections.shape[2]):
            confidence = detections[0, 0, i, 2]
            if confidence > thr:
                class_id = int(detections[0, 0, i, 1])
                . . .
                if class_id in classNames:
                    value_x = int(center[0])
                    value_y = int(center[1])
                    label = classNames[class_id] + ": " + \
                        "{0:.3f}".format(distance_map[value_x, value_y] / 1000.0 * 0.3) + " " + "meters"
                    . . .

        # Show image with object detection
        cv.namedWindow(WINDOW_NAME, cv.WINDOW_AUTOSIZE)
        cv.imshow(WINDOW_NAME, result)

        # Show Depth map
        cv.namedWindow(WINDOW_NAME_DEPTH, cv.WINDOW_AUTOSIZE)
        cv.imshow(WINDOW_NAME_DEPTH, depth_map)

Listing 1: This snippet from a sample application in the Analog Devices 3D ToF SDK distribution demonstrates the few steps required to acquire depth and IR images and classify them with an inference model. (Code source: Analog Devices)

Here, the process begins by using OpenCV's DNN methods (cv.dnn.readNetFromCaffe) to read the network and associated weights for an existing inference model. In this case, the model is a Caffe implementation of the Google MobileNet Single Shot Detector (SSD) detection network, which is known for achieving high accuracy with relatively small model sizes. After defining the class names, which map the supported class identifiers to class labels, the sample application identifies the available cameras and executes a series of initialization routines (not shown in Listing 1).

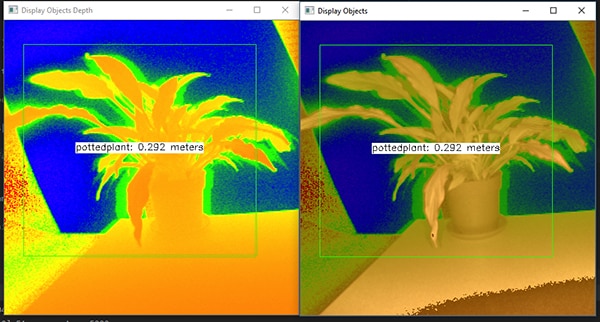

The bulk of the sample code deals with preparing the depth map (depth_map) and IR map (ir_map) before combining them (cv.addWeighted) into a single array to enhance accuracy. Finally, the code calls another OpenCV DNN method (cv.dnn.blobFromImage), which converts the combined image into the four-dimensional blob data type required for inference. The next line of code sets the resulting blob as the input to the inference model (net.setInput(blob)). The call to net.forward() invokes the inference model, which returns the classification results. The remainder of the sample application identifies classification results that exceed a preset threshold. For each of those results, it generates a label and bounding box and displays the captured image data, the label identified by the inference model, and the object's distance from the camera (Figure 8).

Figure 8: Using a few lines of Python code and the OpenCV library, the DNN sample application in Analog Devices’ 3D ToF SDK distribution captures depth images, classifies them, and displays the identified object's label and distance. (Image source: Analog Devices)
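
The post-processing step can be sketched independently of the camera hardware. For SSD-style models, OpenCV's net.forward() returns an array of shape (1, 1, N, 7) in which each row holds an image index, class identifier, confidence, and a bounding box given as normalized left, top, right, and bottom coordinates. The following minimal example filters such an array by confidence and looks up the raw distance value at the center of each box. It runs on synthetic data; the function name, threshold, and test values are assumptions and are not part of the Analog Devices sample.

```python
import numpy as np


def summarize_detections(detections, distance_map, class_names, threshold=0.5):
    """Return (label, confidence, raw_distance) for each confident detection.

    detections: array of shape (1, 1, N, 7) in the SSD output layout.
    distance_map: 2D array of raw per-pixel distance values.
    """
    rows, cols = distance_map.shape[:2]
    results = []
    for det in detections[0, 0]:
        class_id = int(det[1])
        confidence = float(det[2])
        if confidence <= threshold or class_id not in class_names:
            continue
        left, top, right, bottom = det[3:7]
        # Box coordinates are normalized to 0..1; scale to pixel indices
        # and clamp so a box touching the edge cannot index out of range.
        col = int(np.clip((left + right) / 2.0 * cols, 0, cols - 1))
        row = int(np.clip((top + bottom) / 2.0 * rows, 0, rows - 1))
        # NumPy arrays are indexed [row, column], i.e. [y, x].
        results.append((class_names[class_id], confidence, int(distance_map[row, col])))
    return results


if __name__ == "__main__":
    names = {7: "car", 15: "person"}
    fake = np.zeros((1, 1, 2, 7), dtype=np.float32)
    fake[0, 0, 0] = [0, 15, 0.9, 0.25, 0.25, 0.75, 0.75]  # confident "person"
    fake[0, 0, 1] = [0, 7, 0.2, 0.0, 0.0, 0.5, 0.5]       # low-confidence "car"
    distances = (np.arange(16).reshape(4, 4) * 100).astype(np.uint16)
    print(summarize_detections(fake, distances, names))
```

Sampling a single center pixel is the simplest choice. Taking the median over a small window around the center is a common refinement when the depth map contains invalid or noisy pixels.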

As Analog Devices’ DNN sample application demonstrates, developers can use 3D ToF depth maps in combination with machine learning methods to create more sophisticated application features. Although applications that require low latency responses will more likely build these features with C/C++, the basic steps remain the same.

Using 3D ToF data and high performance inference models, industrial robotic systems can more safely synchronize their movements with other equipment or even with humans in "cobot" environments where humans and robots work cooperatively in close proximity. With different inference models, another application can use a high-resolution 3D ToF camera to classify fine movements for a gesture interface. In automotive applications, this same approach can help improve the accuracy of advanced driver-assistance systems (ADAS), taking full advantage of the high temporal and spatial resolution available with 3D ToF systems.

Conclusion

ToF technologies play a key role in nearly any system that depends critically on accurate measurement of distance between the system and other objects. Among ToF technologies, optical 3D ToF can provide both high spatial resolution and high temporal resolution, enabling finer distinction between smaller objects and more precise monitoring of their relative distance.

To take advantage of this technology, however, developers have needed to deal with multiple challenges associated with optical design, precision timing, and synchronized signal acquisition of these systems. As shown, the availability of pre-built 3D ToF systems, such as Analog Devices’ AD-96TOF1-EBZ development platform and ESPROS Photonics’ EPC660 evaluation kit, removes these barriers to application of this technology in industrial systems, gesture interfaces, automotive safety systems, and more.
